Backpropagation Explained: The Art of Perfecting Recipes

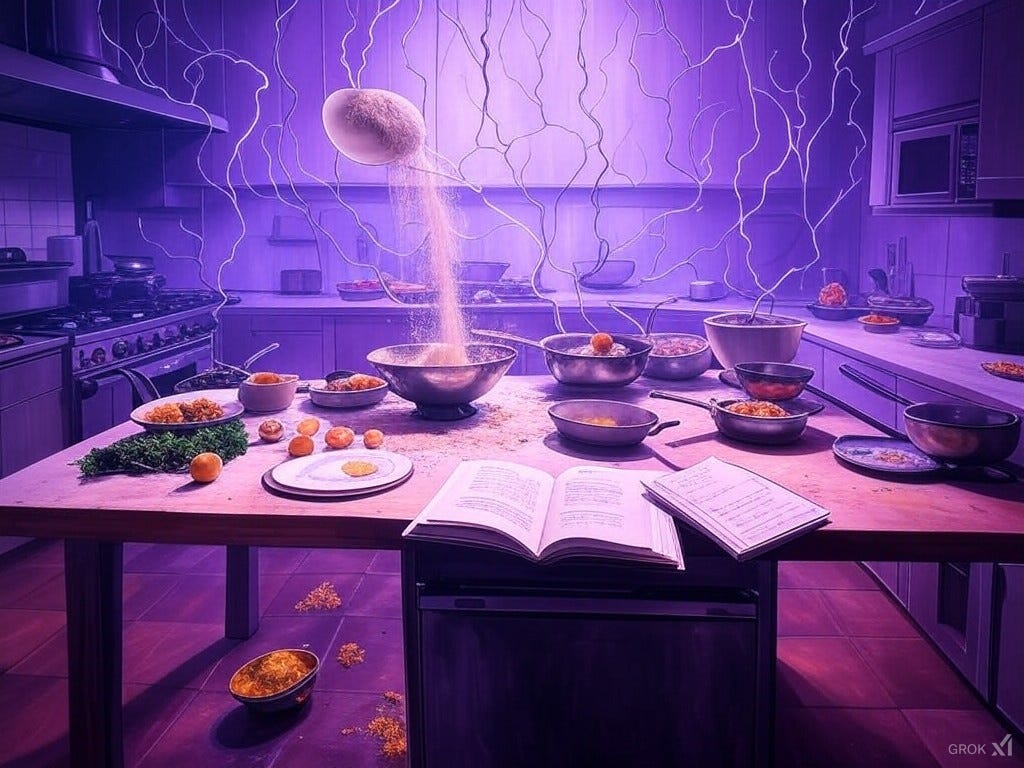

Imagine you possess the world's largest cookbook, containing millions upon millions of recipes - every dish known to humanity is in there, from the simplest scrambled eggs to the most exotic fusion cuisine. This cookbook is our analogy for an AI model like Grok, designed to generate or predict outcomes based on what you ask for. Now, let's explore how this cookbook gets better over time through a process called backpropagation, which is akin to refining and updating recipes.

The Recipe Book Analogy

The Model as a Cookbook: The recipes in this book stand for what the model has learned, which a neural network stores as numeric weights. When you ask the model to generate something, you're essentially asking it to produce a dish from those recipes based on your preferences - the taste, texture, and outcome you desire.

User Prompt: When you specify what kind of dish you want to cook, describing its taste and desired result, this is like providing the model with a prompt. You might say, "I want something sweet, light, and refreshing," and the model draws on its vast collection to come up with a matching recipe.

Forward Propagation: The Initial Guess

Forward Pass: This is when the model (our cookbook) uses its current knowledge (recipes) to generate a dish based on your prompt. It's like the cookbook suggesting, "Try this Lemon Sorbet recipe."

Tasting the Result: Once you've cooked the dish following the recipe, you taste it. This step corresponds to the Loss Function in AI training, which turns the gap between what was produced and what was wanted into a single number. Tasting is how we evaluate how close the dish came to your expectations. Was it too sweet? Not refreshing enough?
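To make the forward pass and the tasting step concrete, here is a minimal sketch in Python. It assumes a hypothetical one-ingredient recipe where sweetness is simply a weight times the grams of sugar, and it uses squared error as the loss; the names and numbers are invented for illustration and are not how any particular model is built.

```python
# Hypothetical one-weight "recipe": predicted sweetness = weight * grams of sugar.
def forward(weight, sugar_grams):
    """Forward pass: use the current weight to produce a prediction."""
    return weight * sugar_grams


def squared_error(predicted, target):
    """Loss: one number describing how far the dish landed from the request."""
    return (predicted - target) ** 2


weight = 0.5        # current state of the recipe (assumed starting value)
sugar_grams = 4.0   # the input, standing in for the prompt
target = 3.0        # the sweetness the taster was hoping for

predicted = forward(weight, sugar_grams)
loss = squared_error(predicted, target)
print(predicted, loss)
```

Here the dish comes out at a sweetness of 2.0 against a target of 3.0, so the loss is 1.0. A perfect dish would score a loss of 0.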

Backpropagation: The Recipe Refinement

Taste Feedback: If the dish doesn't quite match what you were hoping for, this feedback is crucial. In AI terms, this feedback is the loss.

Updating Recipes: Here's where backpropagation comes into play:

Gradients as Guidance: Think of gradients as a taste critique. They tell you not just that something is off, but specifically how off it is and in what direction you should adjust. For instance, if the sorbet is too sour, the gradient would guide you to reduce the lemon juice or add more sugar.

Updating the Cookbook: Based on the gradients (taste feedback), you might:

Modify Ingredients: Change the amount of lemon, sugar, or water in the recipe.

Adjust Cooking Techniques: Perhaps the sorbet needs to be churned longer or less.

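Create New Recipes: If no existing recipe fits, it can feel as though a new one is being written. Here the analogy stretches: backpropagation does not add anything to the book, it only adjusts the weights the network already has.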

Incremental Changes: The adjustments might be significant if the taste was far from perfect, or subtle if it was close but not quite there. This is akin to how much the weights in a neural network are updated: each weight moves in proportion to its own gradient, scaled by a small step size called the learning rate, so some get a big tweak and others just a nudge.

Only Through Backpropagation: Just like you wouldn't change your recipes without tasting the dish first, the AI model doesn't update its 'recipes' (weights) during training without going through backpropagation. Backpropagation works backward from the loss through the network to calculate a gradient for every weight, and an optimizer such as gradient descent then uses those gradients to decide which parts of the network (or recipes) to adjust and by how much.
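The refinement steps above can be sketched in a few lines of Python. This example assumes a hypothetical two-step recipe (two weights applied one after the other), a squared-error loss, and a hand-picked learning rate of 0.05. The gradients are worked out by hand with the chain rule, which is what backpropagation automates in a real network.

```python
def forward(w1, w2, x):
    """Two-step recipe: first w1 scales the input, then w2 scales that result."""
    hidden = w1 * x
    predicted = w2 * hidden
    return hidden, predicted


def backward(w2, x, hidden, predicted, target):
    """Walk backward from the loss to each weight using the chain rule."""
    d_loss_d_pred = 2.0 * (predicted - target)   # derivative of (pred - target)^2
    grad_w2 = d_loss_d_pred * hidden             # predicted = w2 * hidden
    d_loss_d_hidden = d_loss_d_pred * w2         # pass the critique one step back
    grad_w1 = d_loss_d_hidden * x                # hidden = w1 * x
    return grad_w1, grad_w2


# Assumed starting values, chosen only for illustration.
w1, w2 = 0.5, 1.0
x, target = 2.0, 3.0
learning_rate = 0.05

hidden, predicted = forward(w1, w2, x)
loss_before = (predicted - target) ** 2
grad_w1, grad_w2 = backward(w2, x, hidden, predicted, target)

# Gradient descent: move each weight a small step against its gradient.
new_w1 = w1 - learning_rate * grad_w1
new_w2 = w2 - learning_rate * grad_w2

_, new_predicted = forward(new_w1, new_w2, x)
loss_after = (new_predicted - target) ** 2
print(grad_w1, grad_w2)
print(loss_before, loss_after)
```

Both gradients come out negative (-8.0 and -4.0), which says the dish was not sweet enough and both weights should go up. After one update the loss falls from 4.0 to roughly 0.71. Note that the first weight gets the larger adjustment because its gradient is larger.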

The Cycle Continues

Iterative Improvement: Each time you try a recipe, taste it, and refine it, you're improving your cookbook. Similarly, with each batch of data processed by the model, the forward pass, loss calculation, and backpropagation cycle refines the model's ability to generate the right 'recipe' for any given prompt.

Learning from Experience: Just as a chef learns from cooking, the AI model learns from its predictions, getting better at understanding and meeting user requests.
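The taste, critique, adjust cycle can be sketched as a short training loop. This is a toy example under stated assumptions: a hypothetical single-weight recipe, a made-up set of three tasting notes, mean squared error as the loss, and plain gradient descent with a learning rate of 0.05.

```python
# Hypothetical tasting notes: (grams of sugar, sweetness the taster wanted).
tastings = [(1.0, 0.75), (2.0, 1.5), (4.0, 3.0)]

weight = 0.1          # assumed starting recipe, deliberately far off
learning_rate = 0.05  # assumed step size
losses = []

for step in range(30):
    total_loss = 0.0
    total_grad = 0.0
    for sugar, target in tastings:
        predicted = weight * sugar               # forward pass
        error = predicted - target
        total_loss += error ** 2                 # tasting: squared error
        total_grad += 2.0 * error * sugar        # gradient of the loss w.r.t. weight
    mean_loss = total_loss / len(tastings)
    mean_grad = total_grad / len(tastings)
    losses.append(mean_loss)
    weight -= learning_rate * mean_grad          # refine the recipe

print(round(weight, 4), losses[0], losses[-1])
```

With these made-up notes the weight settles very close to 0.75, the value that fits all three tastings, and the loss in the final round is far smaller than in the first.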

Conclusion

Backpropagation is the backbone of how AI models like Grok learn and improve. In our analogy, it's the method by which a vast, ever-evolving cookbook becomes more adept at creating dishes that match your desires. By tasting the results of its predictions and adjusting based on that feedback, the model - or our cookbook - ensures that the next time you ask for a recipe, it's closer to perfection. This process of tasting, critiquing, and adjusting is what makes AI not just a store of knowledge but a dynamic, learning entity.