Deciphering the Language of the Ancients: How Self-Attention Works in LLMs

How the Q, K, and V matrices are used during the self attention mechanism to decipher the interrelationship between words (tokens) in a sequence.

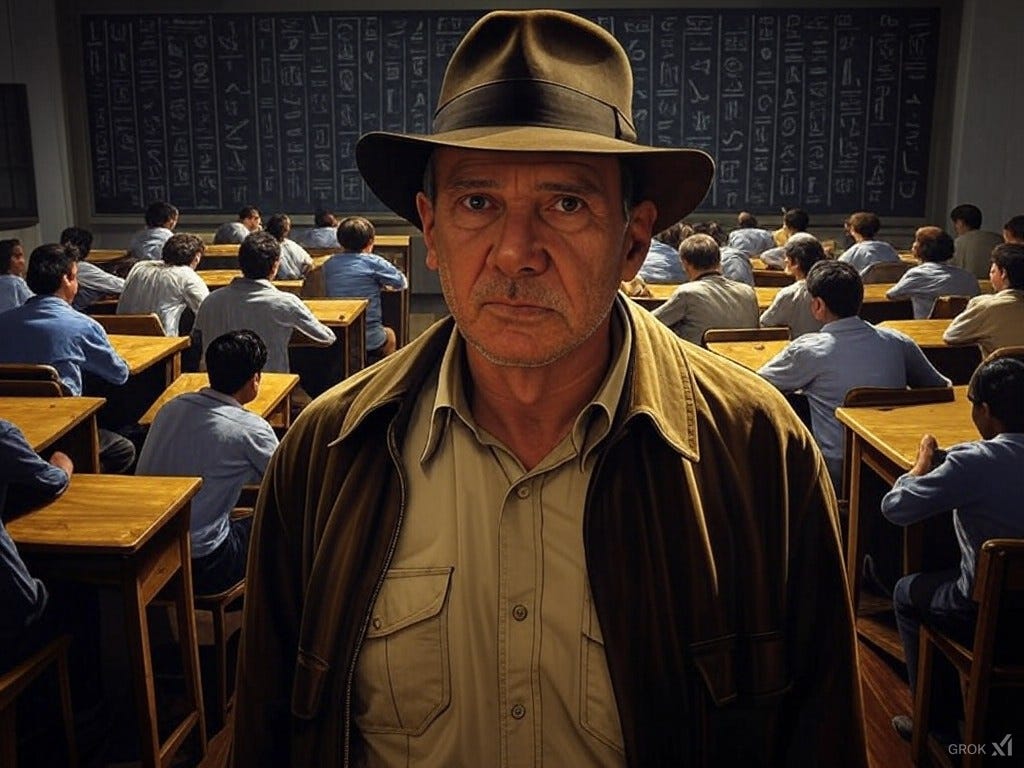

Imagine you've joined the legendary archaeologist, Professor Indiana Jones, on his latest adventure. He's unearthed a sequence of novel hieroglyphics from an ancient Egyptian site, a puzzle that promises secrets about a legendary medallion. But here's the twist: neither Indiana nor anyone else understands these symbols. The riddle before us: Are these hieroglyphics telling a tale of a king burying a valuable medallion, being buried with one, or does the medallion serve as a tool for digging?

This scenario mirrors the challenge faced by Large Language Models (LLMs) when processing sequences of words or tokens. Just like these hieroglyphics, we start with a set of vectors representing words in a sequence. The first hurdle to overcome, before any action or prediction can be made, is to understand the interrelationship between these tokens.

The Need for Contextual Awareness

When you pose a question to an LLM, the model begins by trying to understand the context or the relationships between the words in your query. This is where self-attention comes into play, much like Indiana Jones needing to decipher the hieroglyphics before embarking on his quest for the medallion.

In our analogy, before Indiana can venture into the world to find the medallion (akin to the Feed Forward Network or FFN where world knowledge is applied), he must first understand what the hieroglyphic sequence is saying. He does this by leveraging three specialized classrooms, each contributing uniquely to this understanding:

1. Theoretical Linguistics Classroom:

Role: Focuses on the core concepts of the language. Students here ponder, "What could these symbols mean?"

Function: They determine which symbols or combinations are crucial for understanding, much like focusing on what parts of the sequence need attention.

Visual: Imagine this classroom as a vast grid of desks, each student equipped with a different piece of knowledge or approach to deciphering hieroglyphics.

2. Practical Decoding Classroom:

Role: Students here, with cryptographic and archaeological expertise, explore how symbols connect or relate within the sequence. They ask, "How do these symbols interact within the text?"

Function: They establish connections or patterns, similar to matching queries with relevant parts of the data.

Visual: Another grid of desks, where each student's unique skill set helps in interpreting the practical implications of the hieroglyphics.

3. Critical Analysis Classroom:

Role: This classroom delves into why certain combinations of symbols might be significant, pondering cultural or symbolic meanings.

Function: They refine the context by providing depth or alternative interpretations, much like enriching the understanding of the sequence.

Visual: Each student in their desk, analyzing the hieroglyphics from a cultural or symbolic perspective, transforming raw symbols into meaningful insights.

The Magic Behind the Classrooms: The Weight Matrices

Each hieroglyphic or token in our sequence is represented by a vector in a high-dimensional space, known as a hidden state vector. These vectors capture the initial essence or meaning of each symbol before further interpretation.

These classrooms metaphorically represent static weight matrices (W_q, W_k, W_v) in the self-attention mechanism of LLMs. Here's the technical detail:

Static Weights: The knowledge or teaching approach in these classrooms (W_q, W_k, W_v) doesn't change after training; they are the weights that, once learned, remain fixed for inference. However, for each new hieroglyphic sequence, they produce unique reports (Q, K, V matrices) based on the sequence's hidden states.

Dynamic Matrices: When a new set of hieroglyphics (or a sequence of tokens) comes in, these static weights interact with the hidden state vectors to produce:

Q Matrix: From W_q, helping to focus attention on relevant parts of the sequence.

K Matrix: From W_k, matching queries to relevant parts of the sequence.

V Matrix: From W_v, providing the actual content or transformation based on the context.

The Math: Simply put, each classroom's output is generated by multiplying the hidden state matrix (the collective of hidden state vectors) by their respective weight matrices:

Q = hidden_states * W_q

K = hidden_states * W_k

V = hidden_states * W_v

Think of this multiplication like each student in the classroom (weight) reading a unique aspect of the hieroglyphics (hidden state) to produce a new report (Q, K, or V matrix) tailored to that sequence.

Size Context: For models like Grok-1, each classroom (weight matrix) can be as large as 6,144 by 6,144, but this size can vary depending on the model's architecture and the layer in question. The size of these matrices (Q, K, V) also depends on how many hieroglyphics or tokens are in the sequence, adapting dynamically to the input's length.

Attention Scores: Only the 'reports' from the Theoretical and Practical classrooms (Q and K) are used together to compute attention scores. These scores dictate how much one hieroglyphic should pay attention to another, much like Indy decides how to connect the dots on his chalkboard. The Critical Analysis classroom's report (V) comes into play after this step.

Weighted Sum: After computing the attention scores, they're applied to the Value matrix (V). This can be thought of as Professor Jones using the insights from how symbols relate (attention scores) to decide how to weigh the interpretations or transformations provided by the Critical Analysis classroom (V), effectively updating his understanding of each hieroglyphic.

Bringing It All Together

Professor Jones, like the self-attention mechanism, uses the collective reports (Q, K, V matrices) from these classrooms to piece together the meaning of the hieroglyphics:

Reports: Each classroom's report informs Jones on what to focus on, how symbols relate, and why they're significant.

Analysis: This is akin to computing attention scores where Jones might add notes to a chalkboard, drawing connections or arrows between symbols based on these reports.

Conclusion: Finally, he refines his understanding, much like updating hidden state vectors with contextual information, preparing for the next step of his adventure (or the model's next layer).

This process is the first step in LLMs, where self-attention mechanisms work within the sequence itself to understand the relationships between tokens. Only after this contextual awareness is established can the model move forward, akin to Indiana Jones now ready to step into the broader world to find the medallion, which corresponds to the FFN where external knowledge or predictions are applied.

In summary, just as Indiana Jones deciphers hieroglyphics through the insights of specialized classrooms, LLMs use self-attention to interpret the context of words, turning raw sequences into meaningful narratives. This process is fundamental, allowing the model to understand language intricacies before diving into broader knowledge (FFN) to predict outcomes or generate responses.