Drawing AI: The Magic of Dynamic Computational Graphs

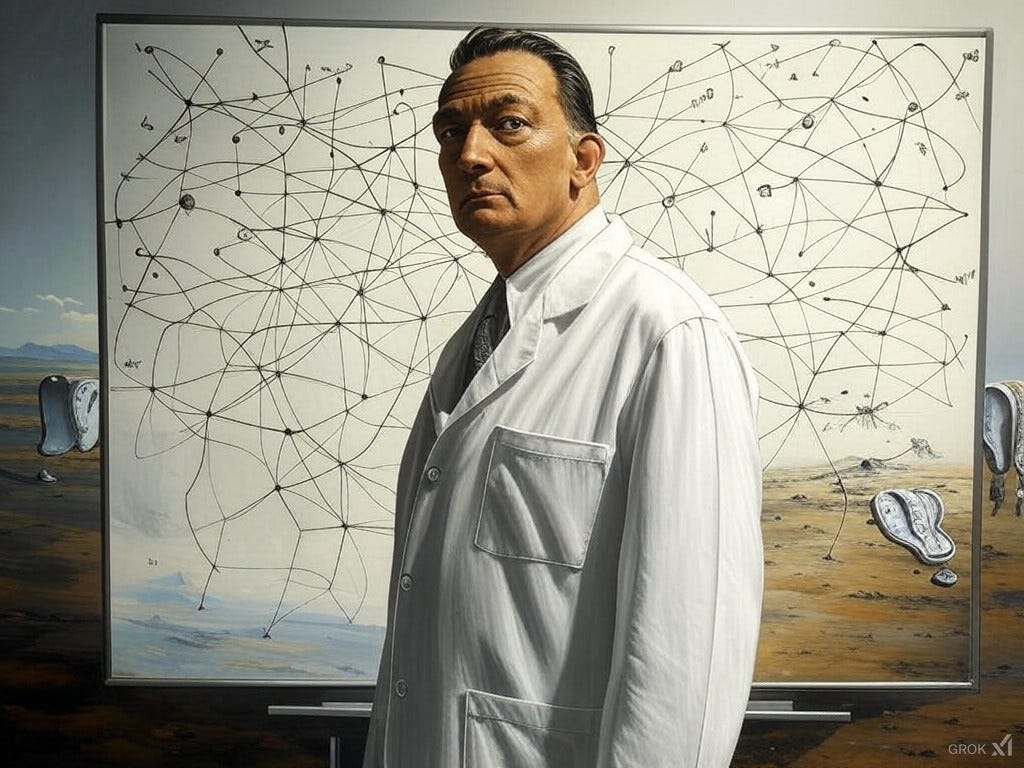

Imagine you're in a room with a whiteboard, and you're tasked with drawing a complex diagram that represents how a Large Language Model (LLM) like Grok works. This diagram isn't just any sketch; it's a detailed workflow of how data flows through the neural network, how it's processed, and how decisions are made. Here's how this analogy can help us understand the difference between dynamic and static computational graphs:

The Whiteboard and the Markers

Dynamic Graph with a Dry Erase Marker:

The Whiteboard: Think of the whiteboard as your LLM's brain, where every layer, every operation, and every decision path is sketched out in real time, as the model runs.

Dry Erase Marker: This is your tool for drawing the dynamic computational graph. With a dry erase marker, you can:

Adjust on the Fly: Because the diagram is drawn as the code runs, you can erase parts of it and redraw them whenever news breaks, market data changes, or other fresh information comes in. Maybe you need to add a new layer to account for a new type of data, or refine an existing one to better process the latest trends.

Experiment Easily: If you want to try out a new idea or see if a different structure would yield better results, you can simply modify the existing diagram without starting over.

Advantage: The beauty here is adaptability. The structure of an LLM like Grok can be changed, retrained, and re-run without a complete overhaul of the code that defines it. This is crucial in a fast-paced environment where data and requirements change constantly.
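To make the dry erase marker concrete, here is a minimal sketch in PyTorch, assuming PyTorch is installed. The names `scaled_sum` and `run` and all the numbers are made up for illustration; nothing here comes from Grok's actual code. The point is that the loop runs a different number of times depending on the input values, so the "diagram" that autograd records has a different depth on each call, and gradients still flow through whatever was drawn.

```python
import torch

def scaled_sum(x, w, limit=10.0, max_steps=5):
    """Multiply x by w until its norm reaches `limit` (hypothetical example)."""
    h = x
    steps = 0
    # Ordinary Python control flow: the number of recorded multiply
    # operations depends on the values in x, not on a fixed blueprint.
    while h.norm().item() < limit and steps < max_steps:
        h = h * w
        steps += 1
    return h.sum(), steps

def run(x_values):
    """Build the graph for one input, backpropagate, and report the result."""
    w = torch.tensor(2.0, requires_grad=True)
    x = torch.tensor(x_values)
    out, steps = scaled_sum(x, w)
    out.backward()  # walks back through the graph recorded for this call
    return steps, w.grad.item()

print(run([1.0, 1.0]))  # small input: three multiplications are recorded
print(run([4.0, 4.0]))  # larger input: only one multiplication is recorded
```

The same function produces two differently shaped graphs without any rebuild step in between, which is what "define-by-run" means in practice.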

Static Graph with a Permanent Marker:

Permanent Marker: Now, imagine you've drawn the same complex diagram but with a permanent marker.

Fixed Diagram: Once it's drawn, you can't simply erase parts or add new ones.

New Whiteboard Needed: If you need to update or adapt this diagram to new information, you'd have to:

Roll in a New Board: Essentially, you're starting from scratch. You'd need to bring in a new whiteboard and redraw the entire diagram, which could take hours or even days given the complexity of an LLM.

Disadvantage: This rigidity means that adapting to new data or changing your model's structure is cumbersome and resource-intensive. In the rapidly evolving field of AI, this can be a significant drawback.
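The permanent marker can be sketched with a toy "define, then run" graph in plain Python. This is not the API of any real framework; `Node`, `placeholder`, `add`, `mul`, and `compile_graph` are hypothetical names invented for this illustration. The diagram is described first, frozen into a fixed list of steps, and only then fed with data.

```python
import operator

class Node:
    """One box in the fixed diagram: an input or an arithmetic operation."""
    def __init__(self, op, args=(), name=None):
        self.op, self.args, self.name = op, args, name

def placeholder(name):
    return Node("input", name=name)

def add(a, b):
    return Node("add", args=(a, b))

def mul(a, b):
    return Node("mul", args=(a, b))

OPS = {"add": operator.add, "mul": operator.mul}

def compile_graph(output):
    """Freeze the diagram into an ordered list of steps, then return a runner."""
    order, seen = [], set()

    def visit(node):
        if id(node) in seen:
            return
        seen.add(id(node))
        for arg in node.args:
            visit(arg)
        order.append(node)  # inputs always come before the ops that use them

    visit(output)

    def run(**feed):
        values = {}
        for node in order:  # the steps never change after compilation
            if node.op == "input":
                values[id(node)] = feed[node.name]
            else:
                a, b = node.args
                values[id(node)] = OPS[node.op](values[id(a)], values[id(b)])
        return values[id(output)]

    return run

x, y = placeholder("x"), placeholder("y")
graph = add(mul(x, y), y)   # describes x * y + y, computes nothing yet
f = compile_graph(graph)    # "permanent marker": the structure is now fixed
print(f(x=2, y=3))

# Changing the structure means describing and compiling a new graph.
g = compile_graph(mul(graph, x))
print(g(x=2, y=3))
```

The runner `f` can be reused with new data as often as needed, but any change to the structure goes back through `compile_graph`, which is the "roll in a new board" step from the analogy.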

Why PyTorch for Grok?

PyTorch, with its dynamic graph approach, is like having that dry erase marker in the world of AI programming:

Flexibility: With PyTorch, you can easily adjust Grok's "diagram" (its neural network architecture) as new data comes in or as you learn more about how to make Grok more helpful or better at understanding humanity.

Research and Development: For a model like Grok, which aims to provide maximum helpfulness by adapting to new knowledge or perspectives, PyTorch's dynamic nature supports rapid prototyping and experimentation. This means Grok can be tweaked to handle new types of queries or learn from new data sets more seamlessly.

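Real-Time Learning: Just as you might adjust your diagram after hearing news that impacts your data, PyTorch redraws the graph on every forward pass, so fine-tuning Grok on new data or adjusting its parameters is an ordinary training step rather than a rebuild of the whole model. A dynamic graph does not make a deployed model learn by itself, but it makes such updates easier to write and run, which helps keep responses relevant and informed.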

Ease of Use: For developers, this means less time waiting for recompilation or worrying about how to integrate new features into a static structure. Instead, they can focus on making Grok smarter, more versatile, and more aligned with the latest in human knowledge or behavior.
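The flexibility described above can be illustrated with a small, hypothetical model, assuming PyTorch is installed. The layer sizes and module names are arbitrary and have nothing to do with Grok's real architecture. The sketch adds layers to a model that has already been run, then runs it again with no separate compile step.

```python
import torch
from torch import nn

torch.manual_seed(0)

# A tiny stand-in network; the sizes are arbitrary.
model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2))
x = torch.randn(3, 4)

before = model(x)  # the graph for this call has three layers

# "Erase and redraw": extend the same model object in place.
model.add_module("extra_relu", nn.ReLU())
model.add_module("extra_head", nn.Linear(2, 1))

after = model(x)   # the next call simply records a five-layer graph

print(tuple(before.shape), tuple(after.shape), len(model))
```

Note that the added layers start with random weights, so the extended model still has to be trained before its outputs mean anything. The dynamic graph removes the rebuild step, not the training.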

In summary, using PyTorch for an LLM like Grok is akin to having a dynamic, adaptable whiteboard where every new piece of information can be integrated without the need to start over. This not only saves time and resources but also keeps Grok at the forefront of AI, ready to adapt and learn in an ever-changing world.