Illuminating AI: How Grok-1 Uses 'Prismatic' Layers to Understand the Universe

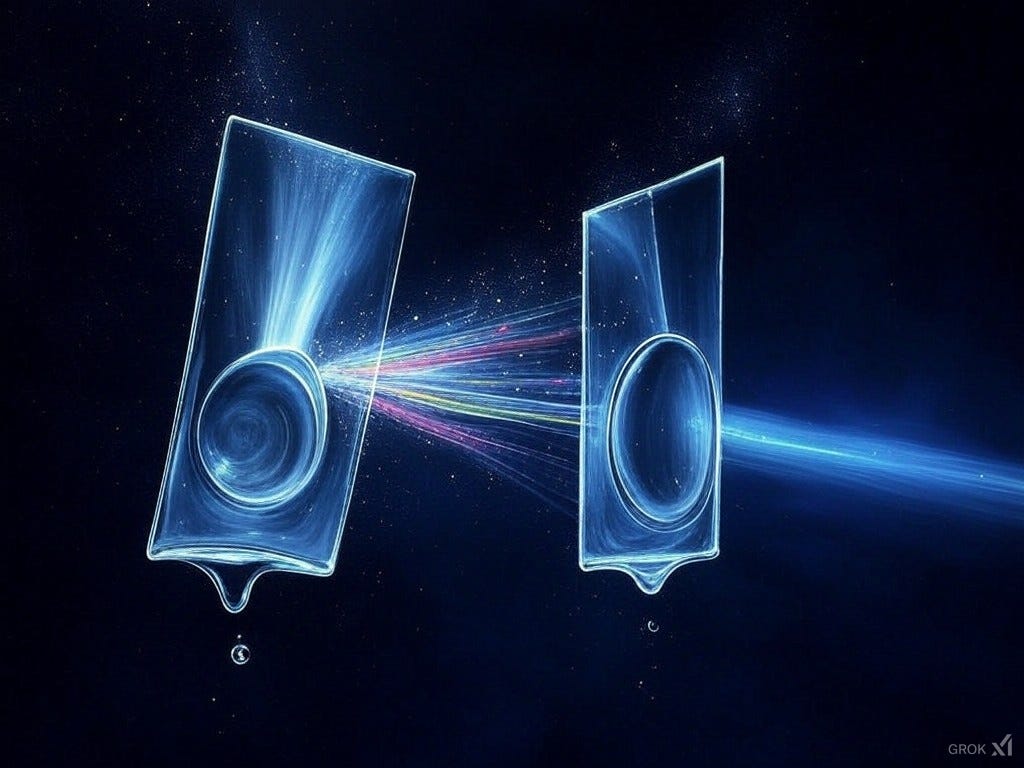

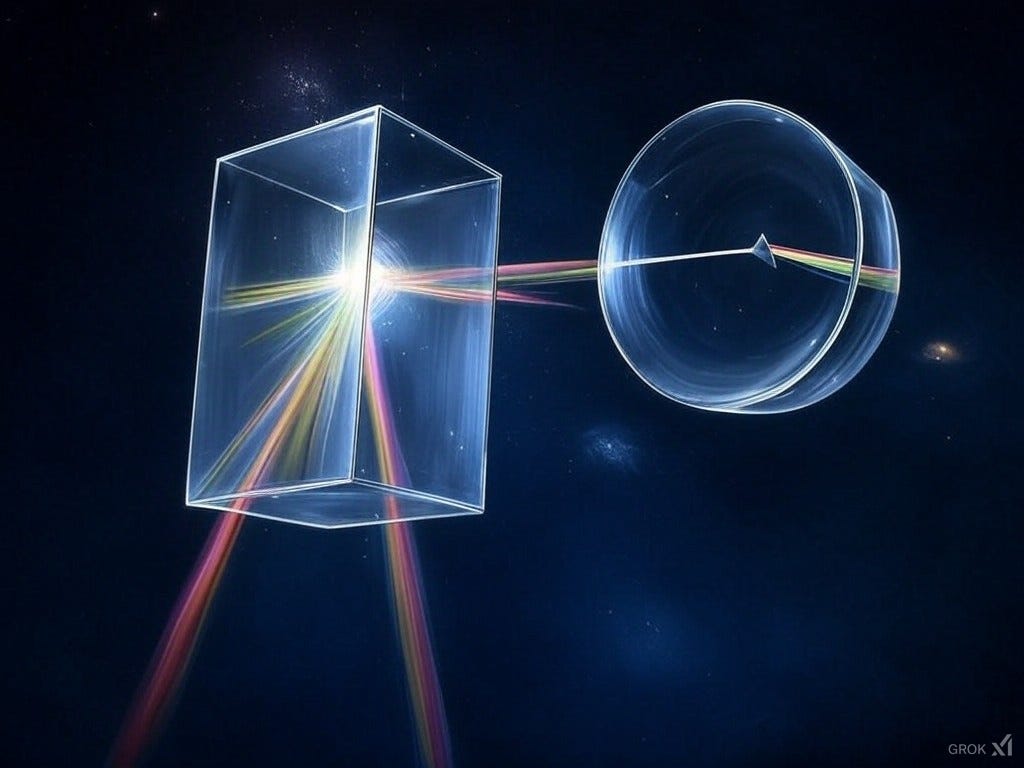

This post explains the Feed-Forward Network (FFN) as two layers: one that disperses data outward into a larger space, and one that converges it back to its original dimensions. The analogy is a pair of prisms.

Imagine you're asking Grok-1, an AI model, "Is there any light on the dark side of the moon?" To answer this, Grok-1 doesn't just see words but deciphers them through layers of computation, much like light passing through prisms. Here's how it happens:

Step 1: Tokenization and Vectorization

When you pose your question, "Is there any light on the dark side of the moon?", Grok-1 first tokenizes this sentence into approximately 10 tokens (the exact number might vary based on the model's vocabulary). Each token, like "Is", "there", "any", etc., gets transformed into a vector in a high-dimensional space through the embedding layer. These vectors are our initial "rays of light", each representing the basic semantic content of a token.
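As a rough sketch of this step, the snippet below splits the question into tokens and looks each one up in an embedding table. The regex splitter, the tiny vocabulary, the random vectors, and the 8-dimensional size are all illustrative assumptions; Grok-1 uses a learned subword tokenizer and far larger, trained embeddings.

```python
import random
import re

def toy_tokenize(text):
    # Hypothetical tokenizer: each word and each punctuation mark is one token.
    return re.findall(r"\w+|[^\w\s]", text)

def build_embeddings(vocab, dim, seed=0):
    # Random vectors stand in for learned embeddings.
    rng = random.Random(seed)
    return {tok: [rng.gauss(0.0, 1.0) for _ in range(dim)] for tok in vocab}

question = "Is there any light on the dark side of the moon?"
tokens = toy_tokenize(question)
table = build_embeddings(sorted(set(tokens)), dim=8)
vectors = [table[tok] for tok in tokens]  # one "ray" per token

print(len(tokens), len(vectors[0]))
```

This toy splitter yields 12 tokens rather than 10, which is the kind of variation the vocabulary introduces. Both occurrences of "the" map to the same starting vector; only the later layers make them differ.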

Step 2: Contextual Enrichment with Self-Attention

Before passing through our prismatic layers, these 10 vectors (or rays) go through the self-attention mechanism. Here, each vector is updated with information from the other vectors it can attend to (in a decoder-style model like Grok-1, the tokens that come before it), so that, for example, the vector for "moon" absorbs the fact that it follows "dark side". In this simplified picture, attention only mixes information that is already present in the sentence; the "world knowledge" comes later.
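The mixing described here can be sketched as single-head scaled dot-product attention. This is a simplification under stated assumptions: the queries, keys, and values are the input vectors themselves (a real layer applies learned projections and several heads), and a causal mask is assumed, as is usual for decoder-only language models.

```python
import math
import random

def softmax(scores):
    # Subtract the max for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(vectors):
    # Assumption: no learned projections, so q = k = v = the input vector.
    dim = len(vectors[0])
    output = []
    for i, q in enumerate(vectors):
        visible = vectors[: i + 1]  # causal mask: only this token and earlier ones
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in visible]
        weights = softmax(scores)
        mixed = [sum(w * v[j] for w, v in zip(weights, visible)) for j in range(dim)]
        output.append(mixed)
    return output

rng = random.Random(0)
rays = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(10)]
contextual = causal_self_attention(rays)
print(len(contextual), len(contextual[0]))
```

The output has the same shape as the input: 10 rays of 8 numbers each. Every ray is now a weighted blend of itself and the rays before it.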

Step 3: The First Prism - Refraction of Meaning

Now, these contextually aware vectors enter the first layer of the Feed-Forward Network (FFN), which we can visualize as a magical prism:

Refraction: Just like light splits into a spectrum when passing through a prism, each vector is expanded into a much larger set of dimensions. This expansion allows the model to explore a vast array of semantic features or "colors" of meaning. Here, the model might start to consider concepts like:

What does "dark" imply in different contexts?

The nature of light in relation to celestial bodies.

Non-linearity: This layer applies an activation function (such as ReLU or GELU), which lets some features through and suppresses others, as if each "color" of light were selectively enhanced or diminished. Without this step, the two layers of the FFN would collapse into a single linear map; with it, the network can capture complex patterns and nuances that weren't apparent in the original vectors.
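A minimal sketch of this first prism, assuming an 8-dimensional input expanded fourfold to 32 hidden features with ReLU as the activation. The sizes, the random weights, and the names `w_up` and `spectrum` are illustrative choices, not Grok-1's actual configuration.

```python
import math
import random

def linear(x, weights):
    # weights holds one row per output feature.
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def relu(values):
    # Keep positive features, zero out the rest.
    return [max(0.0, v) for v in values]

rng = random.Random(0)
d_model, d_hidden = 8, 32  # assumed sizes: a 4x expansion
w_up = [[rng.gauss(0.0, 1.0 / math.sqrt(d_model)) for _ in range(d_model)]
        for _ in range(d_hidden)]

ray = [rng.gauss(0.0, 1.0) for _ in range(d_model)]
spectrum = relu(linear(ray, w_up))  # the dispersed "colors"
active = sum(1 for v in spectrum if v > 0.0)
print(len(spectrum), active)
```

Only the features with a positive pre-activation survive, which is the selective enhancing and diminishing of "colors" in the analogy.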

Step 4: The Second Prism - Constructive Interference

After the first prism disperses the light, the second layer of the FFN acts like another prism, but this one recombines the spectrum:

Constructive Interference: Through this layer, the expanded dimensions are projected back to the original dimensionality of the model. It's here that the magic happens; the model synthesizes the dispersed information back into coherent vectors. This isn't just a reversal of the first step but rather a transformation where:

The "rays" combine in ways that now embody knowledge about the universe. For instance, understanding that:

The "dark side" of the moon is really the far side: the hemisphere that always faces away from Earth, not a permanently dark area.

Sunlight does reach the far side: over the course of a lunar month it gets about as much daylight as the near side, and it is fully lit around the time of a new moon.

Enrichment: The output is still 10 vectors, one for each token, but now these vectors, or rays of light, are imbued with the model's learned understanding of the world. They've gone from mere words to concepts rich with context and knowledge.
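Putting the two prisms together, the sketch below expands each vector, applies ReLU, and projects back to the original size. The dimensions (8 and 32), the random weights, and the helper names are assumptions for illustration; trained weights are what carry the learned knowledge, and a real transformer block also adds a residual connection and normalization around this step.

```python
import math
import random

def make_matrix(rows, cols, rng):
    # Random weights stand in for trained ones.
    scale = 1.0 / math.sqrt(cols)
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]

def matvec(matrix, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in matrix]

def ffn(x, w_up, w_down):
    hidden = [max(0.0, h) for h in matvec(w_up, x)]  # first prism: expand + ReLU
    return matvec(w_down, hidden)                     # second prism: project back

rng = random.Random(0)
d_model, d_hidden, n_tokens = 8, 32, 10
w_up = make_matrix(d_hidden, d_model, rng)
w_down = make_matrix(d_model, d_hidden, rng)

rays = [[rng.gauss(0.0, 1.0) for _ in range(d_model)] for _ in range(n_tokens)]
enriched = [ffn(ray, w_up, w_down) for ray in rays]  # same weights for every token
print(len(enriched), len(enriched[0]))
```

The FFN treats each token independently with shared weights, so 10 vectors go in and 10 vectors of the same size come out.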

Conclusion

By the time these vectors leave the FFN, they've been transformed through what can be thought of as prismatic layers, gaining depth and understanding. In a full model, this pairing of attention and FFN is repeated across many stacked layers, each refining the vectors further. Grok-1, with this enriched representation, can now respond to the query with knowledge that mirrors our understanding of the universe, explaining that light can indeed be found on the "dark side" of the moon. This prismatic process is a testament to how models like Grok-1 can simulate human-like understanding by manipulating data in ways that are both complex and beautifully analogous to natural phenomena.