The Emergence of Intelligence in LLMs via Complex Interactions of Weights

How weights (parameters) in LLMs, individually meaningless, collectively give rise to intelligent output across the many layers of a neural network.

Large Language Models (LLMs) have captured our fascination with their ability to mimic human language. But what powers these models? At their core are concepts like weights and backpropagation, which are pivotal to their learning process. Let's dive into these through an imaginative analogy: envisioning a complex machine.

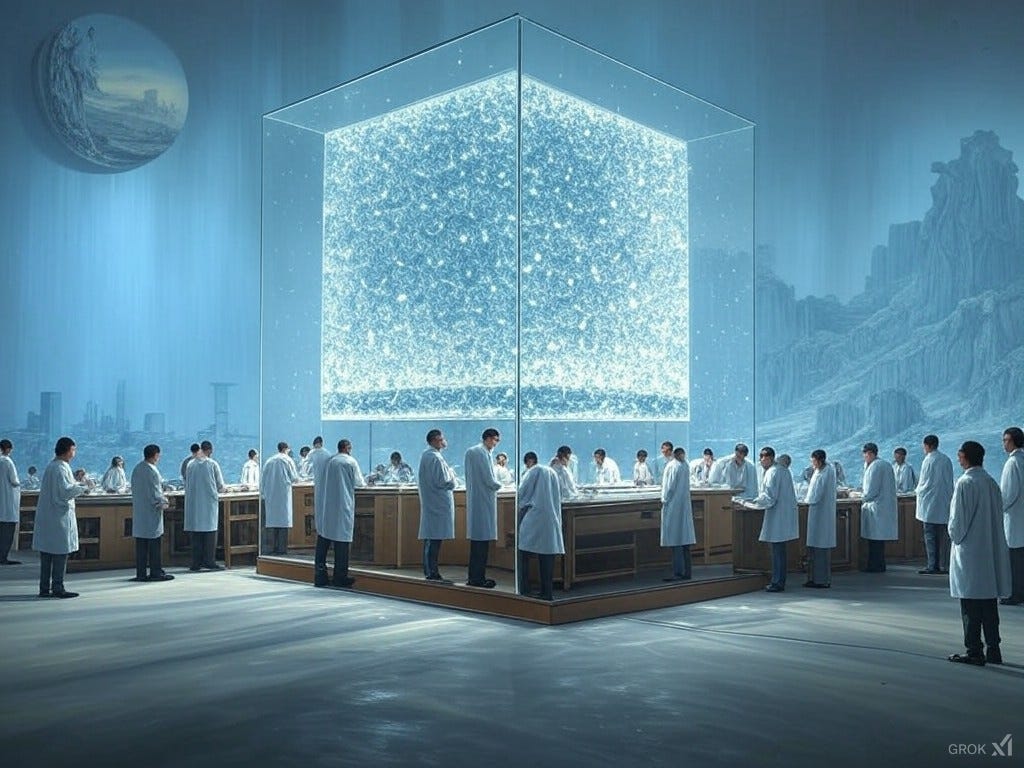

The Machine Analogy: A Cube of Intelligence

Imagine a colossal glass cube filled with a special gel. Suspended within this gel are billions of tiny metallic reflectors, each one centimeter apart. These reflectors represent weights in our neural network, each with a unique orientation that dictates how incoming light will be altered.

Light as Information: The rays of light entering this cube symbolize hidden state vectors in an LLM: numerical representations of the words (more precisely, tokens) in the input text (the prompt).
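To make the analogy concrete, here is a minimal sketch in plain Python (no ML library) of light entering the cube: each token of a prompt is looked up in an embedding table to get its hidden state vector, and one layer of "reflectors" (a weight matrix) transforms it. The vocabulary, sizes, and random values are hypothetical placeholders; real LLMs learn their embeddings and use vocabularies of tens of thousands of tokens with hidden sizes in the thousands.

```python
import random

# Hypothetical toy vocabulary and hidden size (real models are far larger).
VOCAB = ["the", "cat", "sat", "on", "mat"]
HIDDEN = 4

rng = random.Random(0)

# Embedding table: one vector per token. In a trained model these are learned;
# here they are random placeholders.
embeddings = {tok: [rng.uniform(-1, 1) for _ in range(HIDDEN)] for tok in VOCAB}

# One layer of "reflectors": a HIDDEN x HIDDEN weight matrix (random placeholders).
weights = [[rng.uniform(-1, 1) for _ in range(HIDDEN)] for _ in range(HIDDEN)]


def linear(W, x):
    """Matrix-vector product: each output is a weighted sum of all inputs."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]


prompt = ["the", "cat", "sat"]
hidden_states = [embeddings[tok] for tok in prompt]        # light entering the cube
transformed = [linear(weights, h) for h in hidden_states]  # light after one layer
```

Every output component depends on every input component, which is one reason no single weight determines the result on its own.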

The Journey Through the Gel: As light travels through this medium, it interacts with each reflector. However, the gel itself isn't just a passive holder. It acts like an activation function, amplifying or suppressing the light rays and introducing non-linearity into their path. This mirrors how activation functions in neural networks transform each layer's output, allowing the model to capture complex patterns that a purely linear system could not.
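Why does the gel's non-linearity matter? A small sketch, assuming ReLU as the activation (chosen for simplicity; many modern LLMs use smoother variants such as GELU or SwiGLU): without an activation, two layers of reflectors collapse into a single equivalent layer, so stacking them adds no expressive power. With ReLU in between, they no longer collapse. The matrices are arbitrary illustrative values.

```python
def matmul(A, B):
    """Matrix product A @ B."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def linear(W, x):
    """Matrix-vector product W @ x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v):
    """ReLU activation: pass positive values, block negative ones."""
    return [max(0.0, z) for z in v]


W1 = [[1.0, -1.0], [0.5, 2.0]]
W2 = [[2.0, 1.0], [-1.0, 1.0]]
x = [1.0, 2.0]

# Without an activation, two layers equal one layer with matrix W2 @ W1.
two_linear = linear(W2, linear(W1, x))
collapsed = linear(matmul(W2, W1), x)

# With ReLU in between, the composition is no longer a single linear map.
with_relu = linear(W2, relu(linear(W1, x)))

print(two_linear, collapsed)  # [2.5, 5.5] [2.5, 5.5]
print(with_relu)              # [4.5, 4.5]
```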

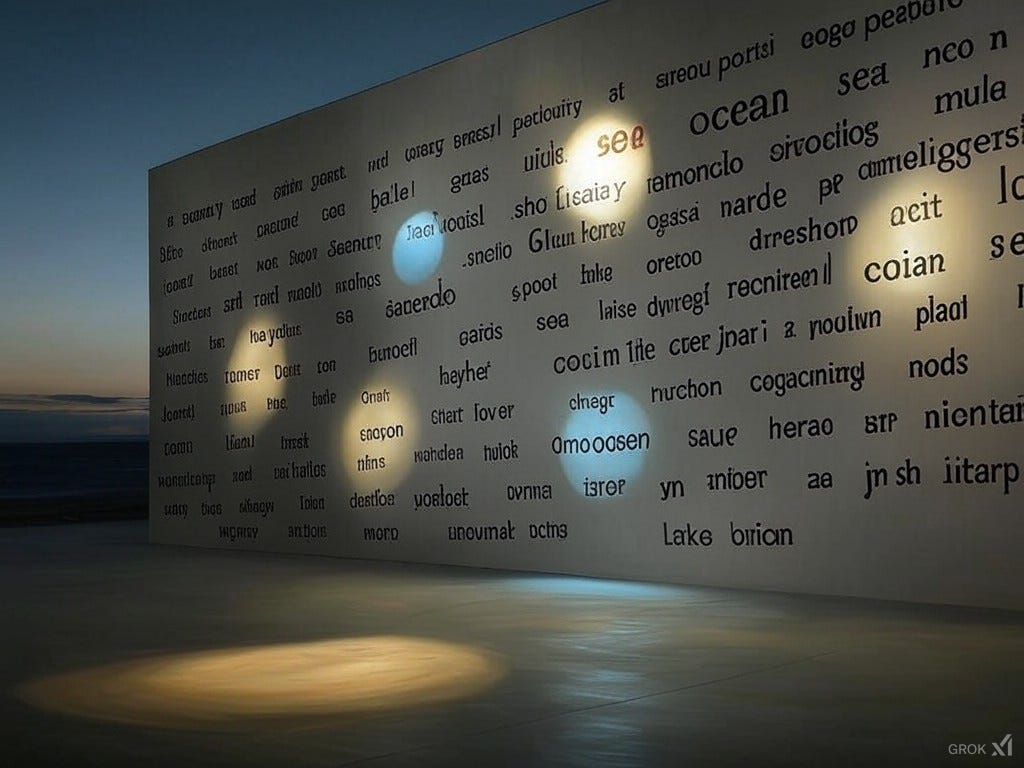

The Output Wall: At the cube's end, there's a wall displaying every English word (in a real LLM, every token in the model's vocabulary). When light exits, it illuminates the words, and each word's brightness indicates its probability of being selected next.
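The brightness on the output wall corresponds to the softmax function, which turns the final layer's raw scores (logits) into probabilities that sum to 1. A minimal sketch, with a hypothetical four-word vocabulary and made-up logits:

```python
import math
import random


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


vocab = ["cat", "dog", "mat", "sat"]   # hypothetical toy vocabulary
logits = [2.0, 1.0, 0.1, 3.0]          # hypothetical scores from the last layer
probs = softmax(logits)

# Greedy decoding picks the brightest word...
brightest = vocab[probs.index(max(probs))]   # "sat"
# ...while sampling picks words in proportion to their brightness.
sampled = random.choices(vocab, weights=probs, k=1)[0]
```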

Understanding Backpropagation: Tuning the Reflectors

Here's where learning, driven by backpropagation, comes into play:

The Feedback Mechanism: When the light doesn't illuminate the expected words, we start adjusting the reflectors using what we'll call "electromagnetic waves".

Adjusting the Reflectors: These waves, representing gradients of the loss function, travel backward from the output wall to each reflector. They gently tweak each reflector's orientation according to how much it contributed to the mismatch between prediction and reality. Backpropagation is the procedure that computes these gradients; gradient descent then uses them to adjust each weight in the direction that reduces the error.
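The "electromagnetic waves" can be made concrete for the last layer of reflectors. The sketch below assumes a cross-entropy loss on top of softmax (a standard choice for next-word prediction), for which the gradient with respect to each logit is the predicted probability minus 1 for the correct word, and the probability itself for every other word. One gradient descent step then moves every weight against its gradient. Sizes, values, and the learning rate `lr` are hypothetical.

```python
import math


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def logits(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def loss(W, x, target):
    """Cross-entropy: -log(probability assigned to the correct word)."""
    return -math.log(softmax(logits(W, x))[target])


def grad(W, x, target):
    """dLoss/dW[i][j] = (p_i - 1[i == target]) * x_j for softmax + cross-entropy."""
    p = softmax(logits(W, x))
    d_logits = [p_i - (1.0 if i == target else 0.0) for i, p_i in enumerate(p)]
    return [[d * x_j for x_j in x] for d in d_logits]


def gradient_descent_step(W, x, target, lr=0.5):
    """Nudge every weight a small step opposite its gradient."""
    G = grad(W, x, target)
    return [[w - lr * g for w, g in zip(w_row, g_row)] for w_row, g_row in zip(W, G)]


W = [[0.1, -0.2], [0.0, 0.3], [-0.1, 0.1]]  # 3 candidate words, 2-dim hidden state
x = [1.0, 2.0]                               # hidden state arriving at the last layer
target = 0                                   # the word that should have lit up

before = loss(W, x, target)
W = gradient_descent_step(W, x, target)
after = loss(W, x, target)                   # lower than before
```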

From Output to Input: The process begins at the back, where the error is measured, and works its way to the front, refining the entire path of light. Mathematically, the chain rule lets each layer pass the error signal back to the layer before it.
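The back-to-front order follows from the chain rule. The sketch below assumes a toy two-layer network (weights, ReLU, weights, then softmax with cross-entropy): it runs the forward pass, computes the gradient at the output, and only then propagates it back to the first layer. Network shape and values are hypothetical; real LLMs have many more layers (and attention), and frameworks such as PyTorch or JAX compute these gradients automatically.

```python
import math


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v):
    return [max(0.0, z) for z in v]


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def loss_and_grads(W1, W2, x, target):
    # Forward pass (front to back): input -> W1 -> ReLU -> W2 -> softmax.
    h_pre = matvec(W1, x)
    h = relu(h_pre)
    probs = softmax(matvec(W2, h))
    loss = -math.log(probs[target])

    # Backward pass (back to front). Start where the error is measured.
    d_logits = [p - (1.0 if i == target else 0.0) for i, p in enumerate(probs)]
    dW2 = [[d * h_j for h_j in h] for d in d_logits]

    # Chain rule: send the error back through W2 ...
    d_h = [sum(W2[i][j] * d_logits[i] for i in range(len(W2))) for j in range(len(h))]
    # ... through the ReLU (units that were "off" pass no gradient) ...
    d_h_pre = [g if z > 0 else 0.0 for g, z in zip(d_h, h_pre)]
    # ... and finally to the first layer of reflectors.
    dW1 = [[g * x_j for x_j in x] for g in d_h_pre]
    return loss, dW1, dW2


W1 = [[0.5, -0.3], [0.2, 0.4], [-0.6, 0.1]]   # 2 inputs -> 3 hidden units
W2 = [[0.3, -0.2, 0.5], [0.1, 0.4, -0.3]]     # 3 hidden units -> 2 outputs
x = [1.0, 2.0]
loss, dW1, dW2 = loss_and_grads(W1, W2, x, target=1)
```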

Iterative Refinement: This adjustment happens not once but many times. The reflectors are nudged after each batch of examples, and training may span multiple epochs (full passes through the data), with each pass refining their orientations further. This mirrors how an LLM is trained over numerous iterations to improve its accuracy.
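A minimal training loop ties the pieces together: the outer loop runs over epochs, and within each epoch the weights are nudged after every example. This sketch assumes a single linear layer with softmax and cross-entropy, a hypothetical three-example dataset, and plain stochastic gradient descent with a batch size of one; real LLM training uses large batches, many layers, and optimizers such as AdamW.

```python
import math
import random


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def logits(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def train(data, n_classes, dim, epochs=200, lr=0.5, seed=0):
    """Plain SGD on a linear softmax classifier; returns weights and per-epoch mean loss."""
    rng = random.Random(seed)
    W = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(n_classes)]
    history = []
    for _ in range(epochs):              # one epoch = one full pass over the data
        order = list(data)
        rng.shuffle(order)
        total = 0.0
        for x, target in order:          # one update per example
            p = softmax(logits(W, x))
            total += -math.log(p[target])
            for i in range(n_classes):
                g = p[i] - (1.0 if i == target else 0.0)
                for j in range(dim):
                    W[i][j] -= lr * g * x[j]
        history.append(total / len(data))
    return W, history


def predict(W, x):
    scores = logits(W, x)
    return scores.index(max(scores))


# Hypothetical toy data: 2-dim "hidden states" paired with the index of the correct word.
data = [([1.0, 0.0], 0), ([0.0, 1.0], 1), ([1.0, 1.0], 2)]
W, history = train(data, n_classes=3, dim=2)
```

Plotting `history` would show the average loss falling epoch by epoch as the reflectors settle into useful orientations.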

Emergence in Complex Systems: The Collective Intelligence

Now, let's explore how this system gives rise to intelligence through emergence:

The Power of the Collective: Individually, no reflector holds intelligence; it's just metal with an orientation. But together, they create a system where complex behavior emerges.

Emergent Intelligence: This collective interaction results in a behavior that can't be predicted by examining one or even a few reflectors. The system, as a whole, processes, understands, and generates language, showcasing how simple rules lead to complex, unpredictable outcomes.

Unpredictable Patterns: As in other complex systems, such as ant colonies or the human brain, the interactions of simple components (here, the weights), shaped by training on vast datasets, lead to emergent capabilities.

Predictability and Complexity

Beyond Individual Weights: Analyzing weights in isolation doesn't reveal much about the model's behavior. This mirrors how in our cube, understanding one or a few reflectors won't help predict the light pattern on the output wall.
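One well-known property illustrates why individual weights are hard to interpret: the hidden units of a layer can be reordered, with the next layer's weights reordered to match, without changing the network's output at all. The same weight value can therefore sit in a different position in two networks that behave identically. A minimal sketch with a hypothetical two-layer ReLU network:

```python
def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v):
    return [max(0.0, z) for z in v]


def net(W1, W2, x):
    """Two-layer network: x -> W1 -> ReLU -> W2."""
    return matvec(W2, relu(matvec(W1, x)))


W1 = [[0.5, -1.0], [2.0, 0.3], [-0.4, 0.8]]   # 2 inputs -> 3 hidden units
W2 = [[1.0, -0.5, 0.2], [0.3, 0.7, -1.1]]     # 3 hidden units -> 2 outputs

# Reorder the hidden units: permute the rows of W1 and the columns of W2 together.
perm = [2, 0, 1]
W1_perm = [W1[k] for k in perm]
W2_perm = [[row[k] for k in perm] for row in W2]

x = [1.0, 2.0]
original = net(W1, W2, x)
permuted = net(W1_perm, W2_perm, x)
# Different weight layouts, same behavior (up to floating-point rounding).
```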

The Complexity of Emergence: The true intelligence of an LLM comes from how all these weights interact, much like focusing a telescope, where each adjustment to a mirror (our reflector) contributes to the final, focused image.

Additional Considerations

Regularization: To keep the reflectors from drifting into extreme orientations, imagine "stabilizing fields" or adjustments to the gel's viscosity. This represents regularization in neural networks, which discourages overly large or extreme weights so that the model generalizes to new data instead of memorizing its training examples (overfitting).
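The "stabilizing field" can be sketched as L2 regularization (weight decay), one common form among several: a penalty proportional to the sum of squared weights is added to the loss, so every update also pulls each weight slightly toward zero. The function names, learning rate, and penalty strength `lam` below are hypothetical.

```python
def l2_penalty(W, lam):
    """Extra loss term: lam * (sum of squared weights)."""
    return lam * sum(w * w for row in W for w in row)


def step_with_l2(W, grad, lr, lam):
    """Gradient step on (data loss + L2 penalty).

    The penalty's gradient is 2 * lam * w, which pulls every weight toward zero.
    """
    return [
        [w - lr * (g + 2 * lam * w) for w, g in zip(w_row, g_row)]
        for w_row, g_row in zip(W, grad)
    ]


W = [[1.0, -2.0], [0.5, 0.0]]
zero_grad = [[0.0, 0.0], [0.0, 0.0]]   # pretend the data gradient is zero
W_next = step_with_l2(W, zero_grad, lr=0.1, lam=0.5)
# With no data signal, each weight shrinks by the factor (1 - 2 * lr * lam) = 0.9.
```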

Bias: Think of biases as small offsets at each layer that nudge the light's path by the same amount regardless of the incoming light. Like the reflectors, biases are learned during training, adding another layer of fine-tuning to the model's output.
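In equation form, a layer computes y = Wx + b: the weights W scale and mix the incoming signal, and the bias b adds an offset that does not depend on the input. A minimal sketch (all values hypothetical) also shows that during backpropagation the loss gradient with respect to the bias equals the error signal arriving at that layer's output, so biases are updated just like weights.

```python
def linear(W, b, x):
    """y = W x + b: weighted sum of inputs plus a per-output bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b_i for row, b_i in zip(W, b)]


W = [[1.0, 2.0], [3.0, 4.0]]
b = [0.5, -1.0]

print(linear(W, b, [0.0, 0.0]))  # [0.5, -1.0]: with no incoming light, only the bias remains
print(linear(W, b, [1.0, 1.0]))  # [3.5, 6.0]

# If d_out is the error signal (dLoss/dy) reaching this layer, then dLoss/db = d_out,
# and each bias takes a gradient descent step like any weight.
d_out = [0.2, -0.4]   # hypothetical error signal
lr = 0.1
b = [b_i - lr * g for b_i, g in zip(b, d_out)]
```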

Real-World Implications

This understanding isn't just academic: it informs practical applications such as language translation, as well as ethical considerations in AI, where emergent behaviors can lead to unforeseen outcomes.

Relating to Other Complex Systems

Just as in natural systems where simple rules lead to complex behaviors, LLMs exemplify how basic interactions can result in sophisticated intelligence.

Conclusion

Through this analogy of a glass cube with metallic reflectors, we've explored how weights and backpropagation in LLMs work to create emergent intelligence. The reflectors, simple in isolation, together form a system where complexity and intelligence arise from simple rules, much like the wonders of natural complex systems.

Glossary:

Epoch: One complete pass through the entire training dataset.

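Gradient: The vector of partial derivatives of the loss with respect to each weight. It points in the direction in which the loss increases fastest, so weights are adjusted in the opposite direction.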

Loss Function: Measures how far off predictions are from actual results.

Activation Function: Introduces non-linearity into the output of a neuron, enabling complex representations.
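For readers who prefer notation, several glossary terms can be written compactly. The symbols are introduced here for illustration: $\mathbf{w}$ for the weights, $\eta$ for the learning rate, $z_i$ for the raw score (logit) of word $i$, and $y$ for the index of the correct word. Cross-entropy is shown as one common loss function and ReLU as one common activation function; neither is the only choice.

```latex
p_i = \frac{e^{z_i}}{\sum_k e^{z_k}}, \qquad
\mathcal{L}(\mathbf{w}) = -\log p_y, \qquad
\nabla_{\mathbf{w}}\mathcal{L} = \left(\frac{\partial \mathcal{L}}{\partial w_1}, \dots, \frac{\partial \mathcal{L}}{\partial w_n}\right), \qquad
\mathbf{w} \leftarrow \mathbf{w} - \eta\,\nabla_{\mathbf{w}}\mathcal{L}, \qquad
\mathrm{ReLU}(x) = \max(0, x)
```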