The Profound Role of 6,144 in Grok-1 - A Deep Dive into Neurons, Weights, and Dimensions

This article explains the relationship among the dimension of the hidden state vectors, the number of weights in each neuron, and the number of neurons in each layer of the network.

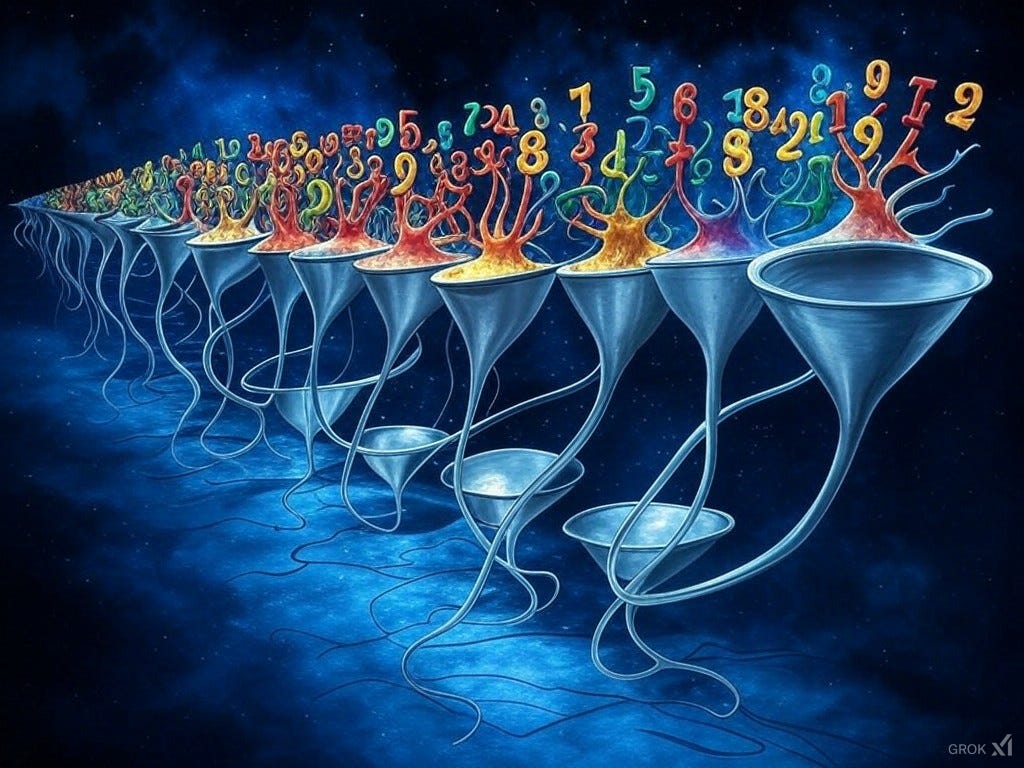

The Essence of a Neuron

In the realm of neural networks, particularly in advanced models like Grok-1, the neuron is the elemental unit of computation. Here's a closer look at how it operates:

Input Vector: Each neuron accepts a vector as its input. This vector might represent a token's hidden state in a language model or a feature set in other applications. In Grok-1, the hidden state vector has 6,144 elements. It is the vector's contents, not the number 6,144 itself, that encode the model's current representation of the token in its context.

The Neuron's Intelligence - Weights:

Every neuron has its own set of weights. These weights are like the neuron's DNA, determining how it interprets the input vector. Critically, the number of weights in each neuron must exactly match the number of elements in the input vector - in this case, 6,144 weights per neuron. With one weight per input element, every element of the input can contribute to the neuron's computation.

Processing and Output:

The neuron processes its input by performing a weighted sum, where each element of the input vector is multiplied by its corresponding weight, and then a bias is added. This sum is then often passed through an activation function, which introduces non-linearity into the model.

The result is a single scalar value. This scalar is the neuron's interpretation or transformation of the input vector, condensing potentially complex information into a single number.
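The computation described above can be sketched in a few lines of plain Python. This is a minimal illustration, not Grok-1's code: the function names, the random values, and the choice of GELU as the activation are all assumptions made for the example. The text only says that "an activation function" is applied.

```python
import math
import random
from typing import Sequence

HIDDEN_DIM = 6144  # hidden-state size quoted in this article


def gelu(x: float) -> float:
    """GELU activation (an assumed choice; the article names no specific one)."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    if len(inputs) != len(weights):
        raise ValueError("a neuron needs exactly one weight per input element")
    pre_activation = sum(x * w for x, w in zip(inputs, weights)) + bias
    return gelu(pre_activation)


# Illustrative random values standing in for a real hidden state and real weights.
rng = random.Random(0)
hidden_state = [rng.gauss(0.0, 1.0) for _ in range(HIDDEN_DIM)]
weights = [rng.gauss(0.0, 0.01) for _ in range(HIDDEN_DIM)]

output = neuron(hidden_state, weights, bias=0.0)
print(type(output).__name__, len(weights))  # float 6144
```

However long the input vector is, the neuron returns one number, and passing a weight list of the wrong length fails immediately, which is the "weights must match the input" rule in executable form.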

The Significance of 6,144 in Grok-1

Hidden State Vectors: Grok-1 uses 6,144 as the dimension of its hidden state vectors. A vector of this size gives each token a high-dimensional representation with room to encode fine-grained linguistic and contextual information.

Neuron Weights: Because each neuron has 6,144 weights, one per element of the hidden state, every element of the input can influence that neuron's output at each computation step.

Layers of Neurons

Constructing the Output Vector:

When neurons are organized into layers, each neuron contributes its scalar output. Collectively, these scalars form a new vector. If there are 6,144 neurons in a layer, then the output vector will naturally have 6,144 elements, maintaining the input's dimensionality.

Consistency Through Layers: As data flows through Grok-1, the vector size stays at 6,144 elements from layer to layer. Each layer's output can therefore be passed directly to the next layer as input, with no reshaping in between.
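A layer as described in this section can be sketched as a list of weight rows, one row per neuron. The example below runs on a toy dimension of 4 so that it executes instantly, then counts the weights for the 6,144-wide case using arithmetic only. The function names are hypothetical, the activation is left out for brevity, and the one-bias-per-neuron count simply follows the neuron description earlier in this article.

```python
import random
from typing import List, Sequence, Tuple

HIDDEN_DIM = 6144  # hidden-state size quoted in this article


def layer_forward(
    inputs: Sequence[float],
    weight_rows: Sequence[Sequence[float]],
    biases: Sequence[float],
) -> List[float]:
    """Return one scalar per neuron; each row holds one neuron's weights."""
    return [
        sum(x * w for x, w in zip(inputs, row)) + b
        for row, b in zip(weight_rows, biases)
    ]


def layer_parameter_count(input_dim: int, num_neurons: int) -> Tuple[int, int]:
    """Return (number of weights, number of biases) for a fully connected layer."""
    return input_dim * num_neurons, num_neurons


# Toy layer: 4 inputs and 4 neurons, so the output has 4 elements as well.
toy_dim = 4
rng = random.Random(0)
toy_weights = [[rng.uniform(-1.0, 1.0) for _ in range(toy_dim)] for _ in range(toy_dim)]
toy_biases = [0.0] * toy_dim
toy_output = layer_forward([1.0, 2.0, 3.0, 4.0], toy_weights, toy_biases)
print(len(toy_output))  # 4

# Same shape rule at the article's scale: 6,144 neurons with 6,144 weights each.
print(layer_parameter_count(HIDDEN_DIM, HIDDEN_DIM))  # (37748736, 6144)
```

The output length always equals the number of neurons, and a square 6,144 by 6,144 layer already holds about 37.7 million weights.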

The Feed-Forward Network (FFN) - An Exception to the Rule

Expansion in the First Layer:

Here, the FFN departs from the pattern. It takes the 6,144-dimensional input and expands it into a much larger space. The first layer has 24,576 neurons, four times the hidden dimension, and each neuron still has 6,144 weights because its input is still the 6,144-element hidden state. The wider intermediate vector gives the network more room to combine features and capture patterns that are hard to express in the lower dimension.

Projection Back in the Second Layer:

After expanding, the second layer of the FFN brings the representation back to the original dimension. This layer has 6,144 neurons again, but each now processes the 24,576-dimensional output of the first layer and therefore has 24,576 weights. The network compresses the expanded representation back into the familiar 6,144-dimensional space. This ensures that the output of the FFN matches the expected input for subsequent operations or the model's final output.

The Role of 6,144 in FFN: Even in this expansion and contraction, the number 6,144 serves as an anchor, ensuring that the model can delve into complexity without losing the ability to communicate back to the rest of the network in its standard language.
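The expand-then-project pattern can be sketched as two plain layers with an activation in between. This follows the simplified two-layer description in this section and is not Grok-1's actual implementation. The toy sizes (8 and 32), the GELU activation, the omission of biases, and all function names are assumptions for the example. The full-size weight counts are computed arithmetically from the figures quoted above.

```python
import math
import random
from typing import List, Sequence

HIDDEN_DIM = 6144  # hidden-state size quoted in this article
FFN_DIM = 24576    # first FFN layer width quoted in this article


def gelu(x: float) -> float:
    """GELU activation (an assumed choice for this sketch)."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def linear(inputs: Sequence[float], weight_rows: Sequence[Sequence[float]]) -> List[float]:
    """One weighted sum per row; each row is one neuron's weights (no bias here)."""
    return [sum(x * w for x, w in zip(inputs, row)) for row in weight_rows]


def feed_forward(inputs, expand_rows, project_rows) -> List[float]:
    """Expand to the wider space, apply the activation, project back down."""
    expanded = [gelu(v) for v in linear(inputs, expand_rows)]
    return linear(expanded, project_rows)


def random_rows(rng: random.Random, num_neurons: int, input_dim: int) -> List[List[float]]:
    """Illustrative random weights: num_neurons rows of input_dim weights each."""
    return [[rng.gauss(0.0, 0.1) for _ in range(input_dim)] for _ in range(num_neurons)]


# Toy sizes with the same 4x ratio as 6,144 -> 24,576 -> 6,144.
toy_hidden, toy_ffn = 8, 32
rng = random.Random(0)
expand_rows = random_rows(rng, toy_ffn, toy_hidden)    # 32 neurons, 8 weights each
project_rows = random_rows(rng, toy_hidden, toy_ffn)   # 8 neurons, 32 weights each
toy_out = feed_forward([1.0] * toy_hidden, expand_rows, project_rows)
print(len(toy_out))  # 8

# Weight counts at the sizes quoted in the article.
expand_weights = HIDDEN_DIM * FFN_DIM    # 24,576 neurons x 6,144 weights
project_weights = FFN_DIM * HIDDEN_DIM   # 6,144 neurons x 24,576 weights
print(FFN_DIM // HIDDEN_DIM, expand_weights, project_weights)  # 4 150994944 150994944
```

Both layers hold the same number of weights, about 151 million each under these figures. Only the shape is transposed: many neurons with short weight vectors on the way up, fewer neurons with long weight vectors on the way down.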

Conclusion

The number 6,144 in Grok-1 is more than just a dimension; it's a testament to the careful engineering of neural network architecture. It's at the heart of how each neuron processes information, how layers maintain consistency, and how the FFN moves between a wider intermediate space and the standard hidden size. This number underscores the balance Grok-1 strikes between capturing the nuanced, multi-dimensional nature of language and other data and keeping computation efficient and coherent across its operations. Through the lens of neurons, weights, and dimensions, we see 6,144 as a key to understanding Grok-1's intelligence.