Understanding Gradients in AI Model Training: The Grok Example

When you're training an AI model like Grok, you're essentially teaching it to make better predictions or decisions. But how does this learning happen? Enter the concept of the gradient, a fundamental idea in machine learning that helps models like Grok improve their performance. Let's break this down into simple, understandable terms.

What is a Gradient?

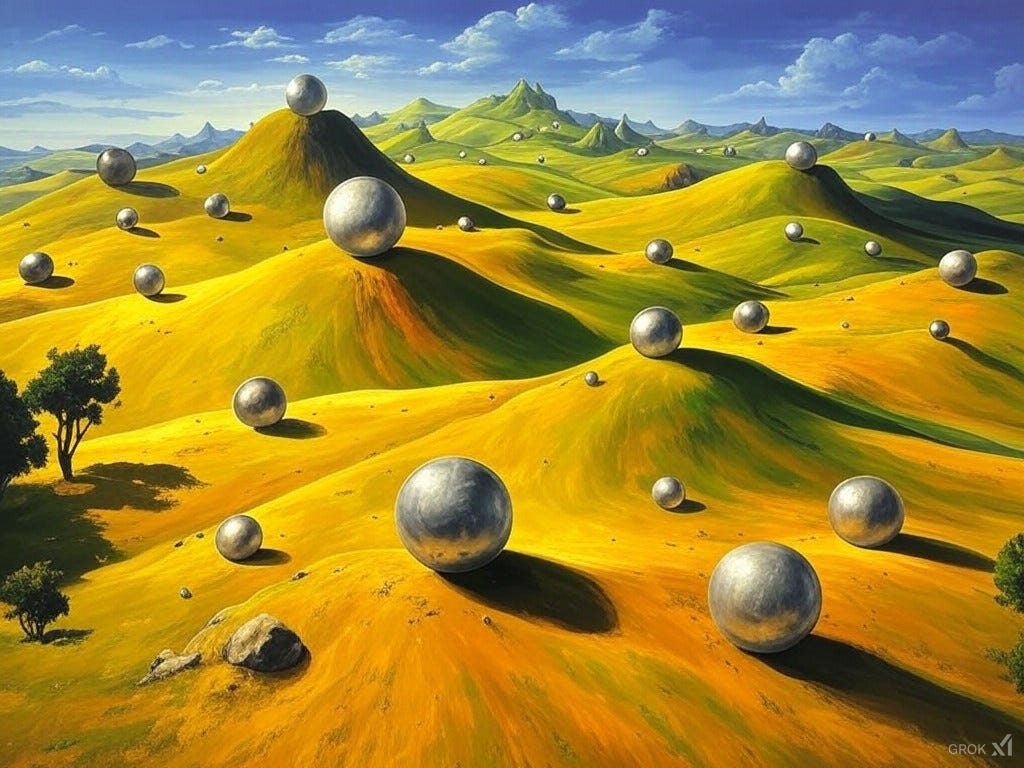

Imagine you're standing on a hilly landscape, and your goal is to reach the lowest point as quickly as possible. The gradient at any point on this hill tells you the direction in which the ground rises most steeply, and how steep that slope is. The quickest way down is therefore to walk in exactly the opposite direction. In the realm of AI:

The gradient is like a guide: it shows how each of the model's settings (parameters) affects its errors, and therefore which way to adjust them to reduce those errors.

The Loss Function

First, let's talk about what we're trying to minimize:

Loss Function: This is a mathematical way to measure how wrong the model's predictions are. Think of it as a scorecard where lower scores mean better performance. If Grok predicts the weather and it's off by a lot, the loss function would give a high score (bad). If it's spot on, the score would be low (good).
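To make the scorecard idea concrete, here is a minimal sketch of one common loss function, mean squared error. The choice of mean squared error, the function name `mean_squared_error`, and the temperature values are all assumptions made for illustration; nothing here describes how Grok itself is scored.

```python
def mean_squared_error(predictions, targets):
    """Average of the squared differences; lower means better predictions."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    squared_errors = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return sum(squared_errors) / len(squared_errors)


# Hypothetical temperatures in degrees Celsius (made up for this example)
actual = [20.0, 22.0, 19.0]
close_forecast = [20.5, 21.5, 19.0]   # nearly right
poor_forecast = [27.0, 15.0, 25.0]    # off by a lot

close_loss = mean_squared_error(close_forecast, actual)
poor_loss = mean_squared_error(poor_forecast, actual)

# The forecast that is further from reality gets the higher (worse) score
print(f"close forecast loss: {close_loss:.3f}")
print(f"poor forecast loss:  {poor_loss:.3f}")
```

Squaring the differences means that large misses are penalized much more heavily than small ones, which is one reason this loss is a popular default for numeric predictions.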

Partial Derivatives: A Piece of the Puzzle

Now, here's where it gets a bit mathy but conceptually simple:

Partial Derivative: Imagine you have a complex recipe with many ingredients. If you want to know how changing one ingredient affects the taste, you focus only on that ingredient while keeping others constant. A partial derivative does exactly this for functions of multiple variables. It tells you how changing one variable (while keeping others constant) affects the overall result.

In our AI context, the loss function depends on many variables (the model's parameters), and we want to know how changing each parameter affects the loss.
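The "change one ingredient, hold the rest fixed" idea can be sketched numerically. The example below assumes a made-up two-parameter loss and estimates each partial derivative by nudging one parameter slightly in both directions (a central finite difference). The names `loss`, `partial_w1`, `partial_w2`, and the step size `h` are illustrative only.

```python
def loss(w1, w2):
    # Hypothetical loss with two parameters; smallest at w1 = 3, w2 = -1
    return (w1 - 3.0) ** 2 + 2.0 * (w2 + 1.0) ** 2


def partial_w1(w1, w2, h=1e-6):
    # Nudge only w1 up and down by h while w2 stays fixed
    return (loss(w1 + h, w2) - loss(w1 - h, w2)) / (2 * h)


def partial_w2(w1, w2, h=1e-6):
    # Nudge only w2 up and down by h while w1 stays fixed
    return (loss(w1, w2 + h) - loss(w1, w2 - h)) / (2 * h)


d1 = partial_w1(0.0, 0.0)
d2 = partial_w2(0.0, 0.0)

# Calculus gives 2 * (w1 - 3) = -6 and 4 * (w2 + 1) = 4 at the point (0, 0)
print(f"dL/dw1 is approximately {d1:.4f}")
print(f"dL/dw2 is approximately {d2:.4f}")
```

The negative value for w1 says that increasing w1 would lower the loss, while the positive value for w2 says that increasing w2 would raise it.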

Parameters of the Model

Parameters: These are the "knobs and dials" of the model that it learns to adjust during training. For Grok, these might include weights that determine how much influence each piece of information has when making a prediction.

Gradients as Vectors

Gradients as Vectors: The gradient isn't just a single number; it's a vector with one entry per parameter, and each entry carries both a direction and a magnitude:

Direction: The sign of each entry tells you which way to move that parameter to decrease the loss. If increasing a parameter increases the loss, the gradient entry for that parameter is positive, so the parameter should be moved down.

Magnitude: This tells you how quickly the loss changes with movement in that direction. A large magnitude means a small tweak in the parameter can lead to a big change in loss.

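Here's a simple conceptual equation to visualize:

$$\nabla L = \left( \frac{\partial L}{\partial w_1}, \frac{\partial L}{\partial w_2}, \ldots, \frac{\partial L}{\partial w_n} \right)$$

Where L is the loss and w_1, w_2, ..., w_n are the parameters of the model. This vector points in the direction of steepest ascent (the quickest way up the hill) for all parameters simultaneously, so its negative points in the direction of steepest descent (the quickest way down).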
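As a rough illustration of the gradient as a vector, the sketch below assumes a tiny one-feature linear model with a mean squared error loss and collects the partial derivative for each parameter into a list. The model, the data, and the names `predict`, `loss`, and `gradient` are hypothetical and chosen only to keep the arithmetic easy to follow.

```python
def predict(w, x):
    # Hypothetical linear model: w[0] is the slope, w[1] is the intercept
    return w[0] * x + w[1]


def loss(w, xs, ys):
    # Mean squared error over the data set
    return sum((predict(w, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient(w, xs, ys):
    # One partial derivative per parameter, gathered into a vector
    n = len(xs)
    d_slope = sum(2 * (predict(w, x) - y) * x for x, y in zip(xs, ys)) / n
    d_intercept = sum(2 * (predict(w, x) - y) for x, y in zip(xs, ys)) / n
    return [d_slope, d_intercept]


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]   # made-up data following y = 2x + 1
w = [0.0, 0.0]              # starting guess for the parameters

g = gradient(w, xs, ys)

# Take one small step against the gradient and one along it
step = 0.01
w_against = [wi - step * gi for wi, gi in zip(w, g)]
w_along = [wi + step * gi for wi, gi in zip(w, g)]

loss_start = loss(w, xs, ys)
loss_against = loss(w_against, xs, ys)
loss_along = loss(w_along, xs, ys)

print("gradient:", g)
print("loss at start:", loss_start)
print("loss after stepping against the gradient:", loss_against)
print("loss after stepping along the gradient:", loss_along)
```

Stepping against the gradient lowers the loss and stepping along it raises the loss, which is why training moves in the direction of the negative gradient.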

How Gradients Help in Training

Gradient Descent: This is the method used to update these parameters. At each step, the model computes the gradient and moves every parameter a small amount in the direction of the negative gradient. By repeating this over many steps, the model moves towards lower loss, learning from its mistakes.
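The update loop can be sketched in a few lines. This is a minimal illustration under stated assumptions rather than how any production model is trained: the two-parameter loss is made up, its gradient is written out by hand, and the step size (called `learning_rate` here, a name and value chosen for this example) is fixed at 0.1.

```python
def loss(w):
    # Hypothetical loss; smallest at w = [3, -1]
    return (w[0] - 3.0) ** 2 + 2.0 * (w[1] + 1.0) ** 2


def gradient(w):
    # Partial derivatives of the loss above, worked out by hand
    return [2.0 * (w[0] - 3.0), 4.0 * (w[1] + 1.0)]


def gradient_descent(w, learning_rate=0.1, steps=100):
    history = [loss(w)]
    for _ in range(steps):
        g = gradient(w)
        # Step in the direction of the negative gradient
        w = [wi - learning_rate * gi for wi, gi in zip(w, g)]
        history.append(loss(w))
    return w, history


final_w, history = gradient_descent([0.0, 0.0])

print("final parameters:", final_w)
print("loss before training:", history[0])
print("loss after training:", history[-1])
```

The step size matters: if it is too large the updates can overshoot the bottom of the valley, and if it is too small training takes many more steps to get there.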

Conclusion

Gradients are the heart of how models like Grok learn. They provide a map for navigating the complex landscape of machine learning, guiding the model towards better performance by showing it how to adjust each parameter to minimize its errors. While the math can get intricate, at its core the gradient is about finding the steepest way downhill from wherever the model currently stands, so that each training step is aimed at making the model's errors a little smaller.